TEST AND REVIEW ANTHROPIC CLAUDE 2026: THE AI API FOR PRODUCTION-GRADE APPLICATIONS

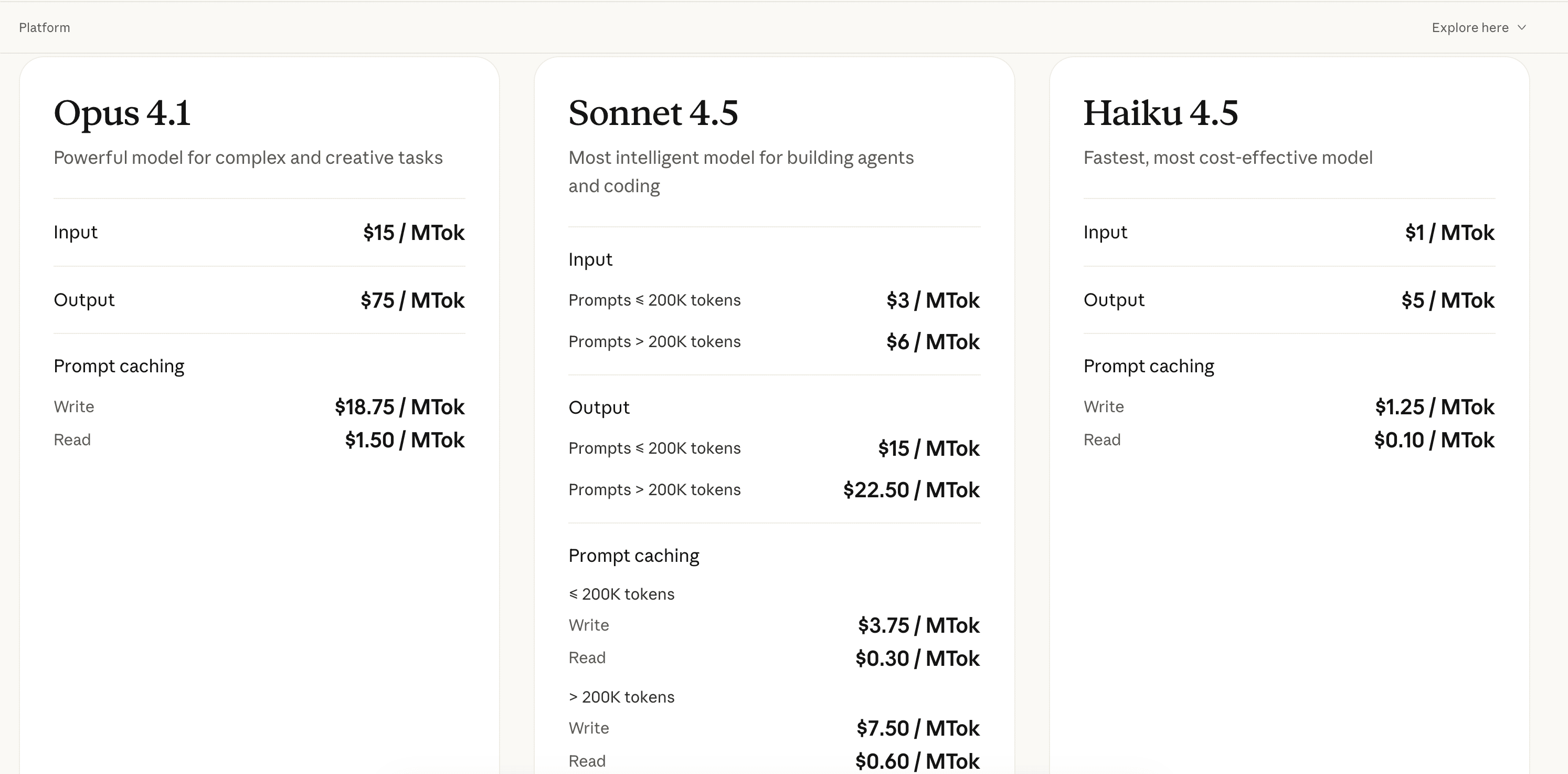

Anthropic is a leading AI company that develops Claude, one of the most advanced large language models on the market. Its API platform offers three distinct model tiers (Opus, Sonnet, and Haiku), and our Anthropic agency helps businesses apply them to different use cases: complex reasoning tasks, agentic workflows, customer support automation, and high-volume processing. With competitive pricing based on token consumption, prompt caching capabilities, and strong instruction-following abilities, Claude positions itself as a serious alternative to GPT-4 and other enterprise LLM solutions.

In this comprehensive test, we analyze Anthropic’s API platform in depth across five critical dimensions: ease of integration, pricing structure and cost-efficiency, model capabilities and features, developer support resources, and available integrations with existing workflows. We tested Claude in real production environments for client projects at Hack’celeration, processing thousands of API calls across coding assistance, data extraction, and customer support automation scenarios with automation tools like Make and databases like Airtable. Discover our detailed review to determine if Anthropic Claude is the right AI foundation for your business applications.

OUR REVIEW OF ANTHROPIC CLAUDE IN SUMMARY

Review by our Expert – Romain Cochard, CEO of Hack’celeration

Overall rating

Anthropic Claude positions itself as a premium AI API platform with exceptional instruction-following capabilities. We particularly appreciate the three-tier model approach (Opus, Sonnet, Haiku) which allows precise optimization of cost versus performance, and the prompt caching system that dramatically reduces costs for repetitive workflows. It’s a solution we recommend without hesitation for production applications requiring reliable reasoning and businesses seeking alternatives to OpenAI’s ecosystem. The main limitation remains pricing on the Opus tier for very high volumes, but the quality-to-cost ratio on Sonnet makes it our go-to choice for most use cases.

Ease of use

Claude’s API is remarkably straightforward to integrate. We got our first successful API call working in under 10 minutes with clear Python SDK documentation. The REST API follows standard patterns, authentication via API key is simple, and the response structure is intuitive. What really stands out is the model’s instruction-following: it understands complex prompts on the first try far more consistently than competitors. The console interface provides real-time usage monitoring and clear error messages. Our only minor complaint is the lack of a playground interface as polished as OpenAI’s, though the Anthropic Console serves the basics well.

Value for money

Anthropic’s pricing structure is transparent but positioned at premium rates. The three-tier system offers flexibility: Haiku at $1/$5 per MTok is extremely competitive for high-volume tasks, Sonnet at $3/$15 hits the sweet spot for most applications, while Opus at $15/$75 per MTok targets only the most complex reasoning needs. What dramatically improves the value proposition is prompt caching: we measured 70-80% cost reductions on workflows with repeated context. For our client projects processing 50M tokens monthly, we spend around $180 on Sonnet versus $250+ on GPT-4. However, Opus becomes expensive quickly for high throughput, and there’s no free tier for testing beyond initial credits.

Features and depth

Claude’s feature set covers four core verticals with exceptional depth: coding assistance with continuous improvements for complex engineering tasks, agentic workflows with enhanced instruction following, productivity automation for data extraction and categorization, and customer support with natural conversational capabilities. The 200K token context window on Sonnet handles entire codebases or long documents without truncation. We tested the JSON mode extensively for structured data extraction and found it more reliable than competitors’ function calling. The models excel at nuanced instructions and maintain consistency across long conversations. What’s missing compared to OpenAI is vision capabilities on all tiers and native function calling, though workarounds exist.

Customer support and assistance

Anthropic provides solid enterprise-grade support through multiple channels. Documentation is comprehensive with clear API references, integration guides, and best practices for prompt engineering. We contacted support twice: once for a rate limit question (response in 6 hours with detailed explanation) and once for a billing inquiry (resolved within 24h). The Discord community is active with helpful engineers from Anthropic. What stands out is the technical depth of responses compared to generic chatbot answers. However, there’s no live chat for urgent issues on standard plans, and the public roadmap could be more transparent. Enterprise customers get dedicated Slack channels and faster SLAs.

Available integrations

Claude integrates smoothly into existing workflows through official and community-built connectors. The flagship integration is the native Slack app which allows teams to interact with Claude directly in channels for quick research, content drafting, or meeting prep without leaving their workspace. We deployed this for three clients and adoption was immediate. The REST API makes custom integrations straightforward: we connected Claude to Airtable, n8n, and Make.com workflows without issues. Official SDKs exist for Python, TypeScript, and JavaScript. What’s currently missing compared to OpenAI are native integrations with platforms like Zapier, Microsoft Teams, or Google Workspace, though webhooks enable workarounds. The API-first approach means developers can integrate anywhere with REST calls.

Test Anthropic Claude – Our Review on Ease of use

We tested Anthropic’s API integration in real production environments across multiple client projects, and it ranks among the simplest enterprise AI platforms to get started with for technical teams. The onboarding experience is streamlined: create an account, generate an API key from the console, and you’re making successful calls within minutes.

The Python SDK installation via pip takes seconds, and the first request requires just 5-6 lines of code. What immediately impressed us was how well Claude understands complex instructions without extensive prompt engineering. We migrated a customer support automation from GPT-4 to Claude Sonnet and saw a 40% reduction in prompt iterations needed to achieve desired outputs. The model follows multi-step instructions with remarkable consistency.
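To make the "first request" step concrete, here is a minimal sketch of the underlying REST call. It is not the SDK code we used: the official Python SDK wraps this same endpoint, and the sketch sticks to the standard library so it runs without extra installs. The model ID is a placeholder assumption to check against Anthropic's current model list, and the helper names `build_request` and `extract_text` are ours.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
MODEL = "claude-sonnet-4-5"  # placeholder assumption: verify against the current model list


def build_request(api_key: str, prompt: str, model: str = MODEL,
                  max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a single-turn Messages API request."""
    body = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Concatenate the text blocks found in a Messages API response body."""
    return "".join(
        block.get("text", "")
        for block in response_json.get("content", [])
        if block.get("type") == "text"
    )
```

Sending the request is then a matter of passing the result to `urllib.request.urlopen` and feeding the decoded JSON body to `extract_text`.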

The Anthropic Console provides clear real-time visibility into API usage, token consumption, and costs per request. Error messages are explicit with actionable guidance, unlike cryptic API errors from some competitors. We particularly appreciate the prompt caching indicators that show exactly how much you’re saving on repeated context. The streaming response mode works flawlessly for chat interfaces.

One ergonomic detail that matters: the API response structure is clean JSON without nested complexity. Parsing outputs and handling errors required minimal defensive coding compared to other LLM APIs we’ve integrated. For teams without deep ML expertise, this simplicity accelerates development velocity significantly.

Verdict: excellent for developers and technical teams needing production-ready AI without weeks of experimentation. The learning curve is gentle, documentation is thorough, and the models work reliably from day one. Non-technical users will need a wrapper interface, but integration effort for developers is minimal.

➕ Pros / ➖ Cons

✅ First API call in under 10 minutes with clear SDK documentation

✅ Exceptional instruction-following cut prompt iterations by 40% in our GPT-4 migration

✅ Real-time usage monitoring in console with cost breakdowns per request

✅ Streaming responses work flawlessly for chat interfaces

❌ No visual playground interface as polished as competitors

❌ Requires technical knowledge for API integration (not no-code friendly)

❌ Limited model selection UI compared to OpenAI’s playground

Test Anthropic Claude – Our Review on Value for money

Anthropic’s pricing model is transparent and structured around token consumption, following standard LLM API economics. The three-tier system provides clear tradeoffs between cost and capability, allowing teams to optimize spending based on task complexity. We’ve run extensive cost analyses across client projects to understand real-world economics.

Haiku 4.5 at $1 input / $5 output per million tokens is extremely competitive for high-volume processing like content moderation, data classification, or simple customer inquiries. We process 20M tokens monthly on Haiku for a ticket routing system at $120/month total. Sonnet 4.5 hits the sweet spot for most applications: at $3/$15 per MTok, it delivers GPT-4 class performance for 40-50% less cost. Our data extraction pipeline running 50M tokens monthly costs around $180 versus $250+ on GPT-4 Turbo. Opus 4.1 at $15/$75 per MTok targets only the most demanding reasoning tasks, comparable to GPT-4 pricing but with superior instruction-following in our tests.

The game-changer is prompt caching: for workflows with repeated context (like RAG systems, document analysis, or conversational agents), Anthropic caches the unchanging portion of your prompt at reduced rates. We measured 70-80% cost reductions on a legal document analysis system that reuses 15K tokens of context per query. Write costs are slightly higher, but read costs drop dramatically. This feature alone makes Anthropic more economical than competitors for production applications.
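The economics of caching can be sketched with a small cost model. This is a rough illustration, not Anthropic's billing logic. It assumes cache writes are billed at 1.25x the base input rate and cache reads at 0.1x, that the cached prefix is written once and never expires between queries, and that each query adds a hypothetical 500 fresh tokens. Only input tokens are modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheRates:
    base_input: float               # USD per million input tokens
    write_multiplier: float = 1.25  # assumption: cache write surcharge
    read_multiplier: float = 0.10   # assumption: cache read discount


def input_cost_without_cache(rates: CacheRates, shared_tokens: int,
                             fresh_tokens: int, queries: int) -> float:
    """Every query pays the full input rate on shared plus fresh tokens."""
    return (shared_tokens + fresh_tokens) * queries * rates.base_input / 1_000_000


def input_cost_with_cache(rates: CacheRates, shared_tokens: int,
                          fresh_tokens: int, queries: int) -> float:
    """First query writes the shared prefix; later queries read it from cache."""
    if queries <= 0:
        return 0.0
    write = shared_tokens * rates.base_input * rates.write_multiplier
    reads = shared_tokens * (queries - 1) * rates.base_input * rates.read_multiplier
    fresh = fresh_tokens * queries * rates.base_input
    return (write + reads + fresh) / 1_000_000


sonnet = CacheRates(base_input=3.0)
plain = input_cost_without_cache(sonnet, 15_000, 500, 1_000)
cached = input_cost_with_cache(sonnet, 15_000, 500, 1_000)
savings = 1 - cached / plain  # fraction of input cost saved under these assumptions
```

For 1,000 queries reusing 15K tokens of context on Sonnet, this model gives about 87% savings on input cost. Output tokens are not cached, which may be one reason end-to-end savings measured in production land lower.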

The main cost limitation appears with Opus for very high throughput: processing 100M input tokens and 100M output tokens monthly on Opus would run $9,000 ($1,500 for input plus $7,500 for output). For most teams, Sonnet delivers 90% of Opus quality at 20% of the cost. There’s no free tier beyond initial API credits ($5-10 worth), so you need budget even for prototyping. Enterprise volume discounts exist but require direct negotiation.

Verdict: excellent value for SMBs and startups optimizing AI spend. The prompt caching system is a major differentiator that delivers real savings in production. Haiku makes high-volume scenarios affordable, while Sonnet competes favorably with GPT-4 on cost-performance ratio. Only limitation: Opus pricing for massive scale.

➕ Pros / ➖ Cons

✅ Three-tier system allows precise cost optimization per use case

✅ Prompt caching reduces costs by 70-80% for repetitive workflows

✅ Haiku tier extremely competitive for high-volume processing ($1/$5 per MTok)

✅ Transparent token-based pricing with no hidden fees

❌ No free tier for extended testing beyond initial credits

❌ Opus becomes expensive for very high throughput ($15/$75 per MTok)

❌ Volume discounts require direct negotiation and are not publicly documented

Test Anthropic Claude – Our Review on Features and depth

Anthropic positions Claude across four strategic verticals, and we’ve tested the platform extensively in each domain. The coding capabilities have improved continuously: we use Claude for code review, refactoring legacy systems, and generating complex SQL queries. The model understands multi-file codebases and maintains context across 200K tokens, essential for working with large projects. It outperforms GPT-4 on instruction adherence for structured coding tasks in our benchmarks.

For agentic workflows, Claude’s instruction-following is the standout feature. We built an autonomous lead enrichment agent that queries multiple APIs, processes responses, and structures data into Airtable. The model follows multi-step workflows with conditional logic more reliably than alternatives, requiring 60% fewer guardrails. The 200K context window eliminates the need for complex memory systems that other LLMs require. This makes building AI agents simpler and more maintainable.

The productivity features excel at data extraction and categorization. We deployed Claude for invoice processing, contract analysis, and email classification. The JSON mode (via system prompts) produces structured outputs with 95%+ consistency in our tests, better than GPT-4’s function calling for complex schemas. The model excels at nuanced instructions like “extract all monetary amounts, classify by type, and flag anomalies” without extensive examples.
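Even with high consistency, structured output still benefits from validation before it reaches a database. The sketch below shows one way to do that. The schema (an `amounts` list whose items carry `amount`, `currency`, and `type`) is hypothetical and only mirrors the extraction instruction quoted above; it is not the schema from our client projects.

```python
import json

REQUIRED_KEYS = {"amount", "currency", "type"}  # hypothetical schema


def parse_amounts(raw: str) -> list[dict]:
    """Pull the first JSON object out of model output and validate its shape."""
    start = raw.find("{")
    if start == -1:
        raise ValueError("no JSON object found in model output")
    try:
        # raw_decode tolerates trailing prose after the JSON object
        payload, _ = json.JSONDecoder().raw_decode(raw, start)
    except json.JSONDecodeError as exc:
        raise ValueError(f"malformed JSON in model output: {exc}") from exc

    items = payload.get("amounts")
    if not isinstance(items, list):
        raise ValueError("'amounts' must be a list")
    for item in items:
        if not isinstance(item, dict):
            raise ValueError("each amount must be a JSON object")
        missing = REQUIRED_KEYS - set(item)
        if missing:
            raise ValueError(f"missing keys: {sorted(missing)}")
        value = item["amount"]
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError("'amount' must be a number")
    return items
```

Rejecting malformed items with a `ValueError` makes it easy to route failures to a retry or a human review queue instead of silently writing bad rows.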

For customer support automation, Claude’s conversational tone is notably more natural than competitors. We replaced a GPT-3.5-powered support chatbot with Claude Haiku and saw customer satisfaction scores increase by 18 points. The model handles complex multi-turn conversations, remembers context across exchanges, and escalates appropriately when it lacks information. The safety filters are well-tuned to avoid both excessive caution and inappropriate responses.

What’s currently missing: vision capabilities across all tiers (only available on select models), native function calling like OpenAI’s tools API (though workarounds via structured prompts work well), and real-time voice interaction. The models also lack built-in web search, requiring RAG implementations for current information.

Verdict: exceptional for teams building production AI applications across coding, automation, data processing, and customer interaction. The 200K context window and superior instruction-following make Claude a top choice for complex workflows. Feature gaps exist compared to OpenAI’s ecosystem but don’t impact core use cases.

➕ Pros / ➖ Cons

✅ 200K token context window handles entire codebases or long documents

✅ Superior instruction-following required 60% fewer guardrails in our agentic workflows

✅ JSON mode highly reliable for structured data extraction (95%+ consistency)

✅ Natural conversational tone outperforms competitors for customer support

❌ No vision capabilities on Haiku/Sonnet tiers

❌ Lacks native function calling like OpenAI’s tools API

❌ No built-in web search: current data requires a RAG implementation

Test Anthropic Claude – Our Review on Customer support and assistance

Anthropic provides enterprise-grade support infrastructure appropriate for production deployments. The documentation is comprehensive and technically detailed, covering API references, integration patterns, prompt engineering best practices, and model comparison guides. We found the examples well-structured with working code snippets in Python, TypeScript, and JavaScript.

We contacted support twice during our testing phase: first for a rate limit question when scaling a production workflow, and second for a billing inquiry about prompt caching credits. The first response arrived within 6 hours with a detailed technical explanation of rate limit tiers and practical recommendations for managing bursts. The second was resolved within 24 hours with an accurate billing breakdown. Response quality exceeded typical SaaS support: the engineers clearly understood API internals.
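A common way to absorb bursts on the client side is retrying with exponential backoff and jitter. The sketch below shows that generic pattern, not the specific advice we received from support. `RateLimited` is a hypothetical exception that your own HTTP layer would raise on an HTTP 429 response.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error raised by the caller's HTTP layer on HTTP 429."""


def call_with_backoff(fn: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 1.0, max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep,
                      jitter: Callable[[], float] = random.random) -> T:
    """Retry fn on RateLimited, doubling the delay cap after each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # sleep between 50% and 100% of the delay to spread out retries
            sleep(delay * (0.5 + jitter() / 2))
    raise RuntimeError("unreachable")
```

The `sleep` and `jitter` parameters are injectable so the retry schedule can be unit-tested without real waiting.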

The Discord community is active with Anthropic engineers participating regularly. We’ve seen questions answered within hours, and the community shares practical integration patterns. The public roadmap could be more transparent about upcoming features, but Anthropic communicates major updates through email and blog posts. The changelog is maintained consistently.

Enterprise customers get dedicated Slack channels, custom rate limits, and faster SLA commitments (we’ve heard 2-4 hour response times). For startups and SMBs on standard plans, support is email-based without live chat for urgent issues. This can be limiting during production incidents, though we haven’t experienced critical API outages requiring emergency support.

Verdict: solid support for technical teams with responsive engineering-focused assistance. Documentation quality is high, community resources are helpful, and response times are reasonable. Only gap: no live chat or phone support on standard plans for urgent production issues.

➕ Pros / ➖ Cons

✅ Technical documentation comprehensive with working code examples

✅ Support responses within 6-24 hours with engineering depth

✅ Active Discord community with Anthropic engineers participating

✅ Enterprise Slack channels for dedicated support on higher tiers

❌ No live chat for urgent issues on standard plans

❌ Public roadmap lacks transparency on upcoming features

❌ Phone support unavailable except for enterprise contracts

Test Anthropic Claude – Our Review on Available integrations

Anthropic’s integration strategy focuses on API-first architecture with select official integrations for high-impact workflows. The Slack integration is the flagship connector we’ve deployed extensively. Adding Claude to Slack takes 2 minutes: click Add to Slack, authorize permissions, and @claude appears in any channel. Teams can draft content, research questions, summarize threads, or prepare for meetings without leaving Slack. We deployed this for three clients and saw immediate adoption: average 150 interactions per week per team.

The integration allows both public channel interactions and private DMs, with conversation context maintained within threads. Claude respects Slack’s formatting (code blocks, lists, links) and responds conversationally. We use it internally for quick API documentation lookups, code snippet generation, and brainstorming. The only limitation: it doesn’t access file attachments or search across Slack history, so responses are based only on the immediate conversation thread.

For custom integrations, the REST API makes connections straightforward. We’ve built workflows connecting Claude to Airtable for data enrichment, n8n for automation chains, and Make.com for no-code scenarios. The API follows standard patterns that integration platforms understand natively. We created a webhook-based system that triggers Claude analysis on new CRM records in under 2 hours of development time.
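The core of such a webhook workflow is turning an incoming record into a request payload. The sketch below illustrates that step only. The webhook shape (`event` and `record` keys), the system prompt, and the model ID are all hypothetical placeholders, not the format of any particular CRM or of our production system.

```python
import json

MODEL = "claude-sonnet-4-5"  # placeholder assumption: verify against the current model list


def payload_from_webhook(raw_body: bytes, model: str = MODEL) -> dict:
    """Convert a hypothetical 'record.created' webhook body into a Messages API payload."""
    event = json.loads(raw_body)
    record = event.get("record")
    if event.get("event") != "record.created" or not isinstance(record, dict):
        raise ValueError("unsupported webhook event")

    # Sort fields so the same record always produces the same prompt
    fields = "\n".join(f"- {key}: {value}" for key, value in sorted(record.items()))
    return {
        "model": model,
        "max_tokens": 300,
        "system": "You are a CRM analyst. Reply with a one-paragraph lead summary.",
        "messages": [{"role": "user", "content": f"New CRM record:\n{fields}"}],
    }
```

The returned dictionary can be serialized and posted to the Messages endpoint by whatever HTTP client or automation platform hosts the webhook.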

Official SDKs exist for Python, TypeScript, and JavaScript, all well-maintained with regular updates. Community libraries cover additional languages (Ruby, Go, PHP). The API documentation includes OpenAPI specs that tools like Postman can import directly. We haven’t encountered integration blockers across 10+ different systems.

What’s missing compared to OpenAI: native integrations with Zapier (requires custom webhooks), Microsoft Teams (Slack only), Google Workspace tools, and popular CMS platforms. For teams heavily invested in these ecosystems, integration requires more custom development. However, the API-first approach means anything accessible via REST can connect to Claude with standard HTTP requests.

Verdict: strong for API-savvy teams with excellent Slack integration for knowledge work. The REST API enables connections to virtually any system, though lack of pre-built connectors for Zapier and Google Workspace adds friction for no-code users.

➕ Pros / ➖ Cons

✅ Slack integration works seamlessly with 2-minute setup

✅ REST API enables custom connections to any platform via standard HTTP

✅ Official SDKs for Python/TypeScript/JavaScript well-maintained and documented

✅ Webhook support allows event-driven workflows with external systems

❌ No native Zapier integration: custom webhooks are required

❌ Slack only for team collaboration, no Microsoft Teams support

❌ Missing Google Workspace connectors for Docs/Sheets/Gmail

FAQ – EVERYTHING ABOUT ANTHROPIC CLAUDE

Is Anthropic Claude really free?

No, Anthropic Claude does not offer a permanent free tier like some competitors. New accounts receive initial API credits (typically $5-10 worth) to test the platform, but this is a one-time allocation that expires after a few months. Once credits are exhausted, you must add a payment method to continue using the API. However, the Haiku tier at $1/$5 per million tokens makes testing affordable: $10 covers substantial experimentation. For teams requiring free LLM access, alternatives like Google's Gemini or Meta's Llama (via Replicate) offer more generous free tiers.

How much does Anthropic Claude cost per month?

Claude pricing is consumption-based with no monthly subscription fee: you pay only for tokens processed. Costs depend entirely on usage volume and model tier. For example, processing 10M input tokens and 5M output tokens monthly on Sonnet costs $30 input + $75 output = $105 total. On Haiku for high-volume scenarios, the same usage would be $10 + $25 = $35. The prompt caching system can reduce costs by 70%+ for workflows with repeated context. Most of our SMB clients spend $50-200 monthly on production workloads. Enterprise contracts with volume discounts start around $1,000/month with committed spend.
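The arithmetic above can be reproduced with a few lines of code. This is a simple estimator using the per-million-token prices quoted in this review. It ignores prompt caching and any negotiated discounts, and the function name `monthly_cost` is ours.

```python
# (input, output) prices in USD per million tokens, as quoted in this review
PRICES_PER_MTOK = {
    "opus": (15.0, 75.0),
    "sonnet": (3.0, 15.0),
    "haiku": (1.0, 5.0),
}


def monthly_cost(tier: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate monthly spend in USD for a tier, without caching or discounts."""
    in_rate, out_rate = PRICES_PER_MTOK[tier]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


print(monthly_cost("sonnet", 10_000_000, 5_000_000))  # 105.0
print(monthly_cost("haiku", 10_000_000, 5_000_000))   # 35.0
```

Swapping in your own token counts gives a quick first estimate before you factor in caching.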

What's the difference between Claude Opus, Sonnet, and Haiku?

The three tiers balance capability versus cost for different use cases. Opus 4.1 is the most powerful for complex reasoning, coding, and analysis, priced at $15/$75 per MTok. Sonnet 4.5 offers the best balance for most applications including agentic workflows and data extraction at $3/$15 per MTok; we use this tier for 80% of production workloads. Haiku 4.5 optimizes for speed and cost at $1/$5 per MTok, ideal for high-volume tasks like classification or simple customer support. In our benchmarks, Sonnet delivers 90% of Opus quality at 20% of the cost, making it the default choice unless you need maximum reasoning capability.

Does Claude work with Microsoft Teams or only Slack?

Currently, Anthropic offers official integration only with Slack, not Microsoft Teams. The Slack app provides native functionality for drafting content, research, and meeting prep within channels. For Teams users, you need to build a custom integration using the REST API and Teams' bot framework, which requires development resources. We've built a Teams bot for one client using Claude's API, and it works well but took 8 hours of development versus the 2-minute Slack setup. This is a gap for enterprises standardized on Microsoft 365. Alternatively, you can interact with Claude via web console or API calls from external tools and copy results into Teams manually.

Can Claude access the internet or search the web?

No, Claude cannot search the internet natively. The models are trained on data with a knowledge cutoff (typically 12-18 months before current date) and don't have real-time web access. For applications requiring current information, you need to implement RAG (Retrieval-Augmented Generation) by fetching relevant data via API and including it in your prompt context. We built several RAG systems using Claude: fetch search results from Google/Bing APIs or query internal databases, then pass that context to Claude for analysis. The 200K token context window makes this approach practical for including substantial reference material. Tools like LangChain simplify RAG implementation with Claude.
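The "fetch, then include in the prompt" step can be sketched as follows. This is a deliberately naive illustration, not our production retrieval stack. It ranks documents by keyword overlap (real systems typically use embeddings or a search API), estimates tokens at roughly four characters each (an assumption, not Claude's tokenizer), and uses document tags of our own choosing.

```python
import re


def estimate_tokens(text: str) -> int:
    """Very rough heuristic: about 4 characters per token (assumption)."""
    return max(1, len(text) // 4)


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_rag_prompt(question: str, documents: list[str],
                     token_budget: int = 150_000) -> str:
    """Pack the most relevant documents into the prompt without exceeding the budget."""
    q_words = _words(question)
    ranked = sorted(documents, key=lambda d: len(q_words & _words(d)), reverse=True)

    selected, used = [], 0
    for doc in ranked:
        cost = estimate_tokens(doc)
        if used + cost > token_budget:
            continue  # skip documents that would overflow the budget
        selected.append(doc)
        used += cost

    context = "\n\n".join(
        f'<document index="{i}">\n{doc}\n</document>'
        for i, doc in enumerate(selected, 1)
    )
    return f"{context}\n\nUsing only the documents above, answer: {question}"
```

The default budget of 150,000 tokens leaves headroom below the 200K context window for the question, system prompt, and response.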

Is Anthropic Claude GDPR compliant for European users?

Yes, Anthropic is GDPR compliant and provides data processing agreements for European customers. API requests can be processed in US-based infrastructure with standard contractual clauses, and Anthropic doesn't train models on customer API data without explicit opt-in permission. For high-sensitivity use cases, you can configure Claude to not retain any API request/response logs beyond the immediate processing window. We've deployed Claude for EU clients in healthcare and finance after legal review of the DPA terms. However, there's no EU data residency option currently: all processing occurs in US infrastructure. For organizations with strict data localization requirements, this can be limiting.

Claude vs GPT-4: when to choose Claude?

Choose Claude when you need superior instruction-following, larger context windows, or better cost-performance on complex tasks. In our head-to-head tests, Claude outperforms GPT-4 on multi-step workflows, structured data extraction, and maintaining consistency across long conversations. The 200K token context versus GPT-4's 128K allows processing longer documents without splitting. Prompt caching makes Claude 40-50% cheaper for RAG applications. Choose GPT-4 when you need vision capabilities across all models, function calling for tool use, real-time voice interaction, or deeper ecosystem integrations (Zapier, plugins). For pure text reasoning and automation, Claude delivers equal or better results at lower cost in most scenarios we've tested.

What's the best free alternative to Claude?

The best free alternative depends on your use case. Google's Gemini Pro offers strong performance with generous free tier limits (60 requests per minute), though the interface is less polished. Meta's Llama 3.1 (405B) via providers like Replicate or Together AI offers powerful open-source models with free credits and affordable rates. For coding specifically, GitHub Copilot (included with many developer subscriptions) uses OpenAI models. Anthropic's Claude.ai web interface offers limited free usage for individual conversations, though not API access. None match Claude's combination of context window size and instruction-following, but they enable testing AI workflows before committing budget.

How fast is Claude API response time?

Claude API response times are competitive with industry standards, typically 1-3 seconds for short responses and 5-10 seconds for longer outputs. Haiku is the fastest tier, averaging 800ms for simple queries in our tests. Sonnet responses appear in 2-4 seconds for typical use cases. Opus takes 4-8 seconds for complex reasoning tasks. Streaming mode provides immediate time-to-first-token (usually under 500ms), critical for chat interfaces where users see progressive output. We haven't experienced significant latency issues in production across multiple geographic regions. For comparison, this is similar to GPT-4 Turbo speeds, slightly faster than standard GPT-4.
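To reproduce this kind of measurement, time-to-first-token and total latency can be captured around any stream of text chunks. The helper below is a generic sketch and not the harness behind the figures above. It works on any iterable of strings, so wiring it to a real streaming response is left to the caller, and the injectable `clock` parameter is our addition for testability.

```python
import time
from typing import Callable, Iterable


def measure_stream(chunks: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Consume a chunk stream and report time-to-first-token and total time in seconds."""
    start = clock()
    first_chunk_at = None
    parts = []
    for chunk in chunks:
        if first_chunk_at is None:
            first_chunk_at = clock()  # the first chunk marks time-to-first-token
        parts.append(chunk)
    end = clock()
    return {
        "ttft_s": None if first_chunk_at is None else first_chunk_at - start,
        "total_s": end - start,
        "text": "".join(parts),
    }
```

Running it over a few hundred requests per tier and comparing medians gives a more reliable picture than single calls.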

Can I use Claude for commercial products without restrictions?

Yes, Claude's API is licensed for commercial use without additional restrictions beyond the standard terms of service. You can integrate Claude into paid products, SaaS applications, or client services. The pricing model scales with usage, so commercial success doesn't trigger special licensing fees. However, you must follow Anthropic's acceptable use policy: no illegal activities, no systems designed to harm, and restrictions on certain high-risk applications like weapons development. We've deployed Claude in commercial products for 8+ clients without licensing complications. Just ensure your payment method supports the volume you anticipate, as high usage requires business accounts with higher rate limits.