TEST AND REVIEW CURSOR 2026: THE AI-POWERED CODE EDITOR THAT AUTOMATES DEVELOPMENT

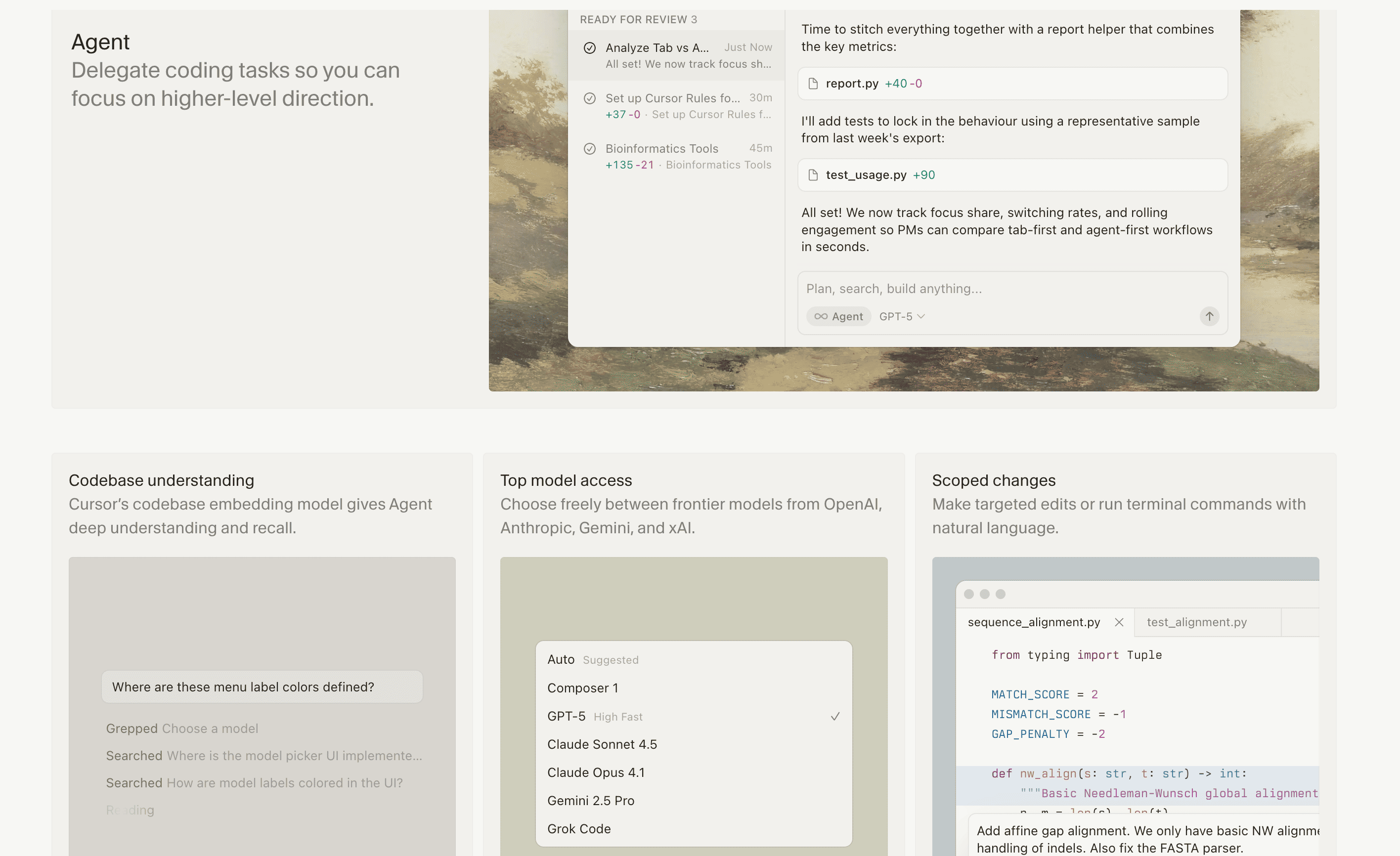

Cursor is an AI-powered code editor that enables developers to code faster using advanced language models like GPT-5, Claude, and Gemini. Thanks to intelligent tab completions, natural language commands, and codebase understanding through advanced embeddings, this tool transforms how developers write and review code. Built on VSCode architecture, Cursor integrates AI directly into the coding workflow with features like AI agent requests, multi-file editing, and terminal commands executed via natural language.

In this test, we examine Cursor’s capabilities, pricing structure, and real-world performance in depth for solo developers, startups, and development teams. We tested the Pro and Pro+ plans over several weeks on production projects to evaluate code completion accuracy, agent performance, and integration with GitHub workflows. Read on for our detailed verdict on whether Cursor justifies the switch from traditional code editors.

OUR REVIEW OF CURSOR IN SUMMARY

Review by our Expert – Romain Cochard CEO of Hack’celeration

Overall rating

Cursor positions itself as a game-changing AI code editor for modern developers. We particularly appreciate the seamless integration of GPT-5 and Claude models directly into the coding workflow, plus the codebase embeddings that provide contextual understanding impossible to achieve with GitHub Copilot alone. It’s a tool we recommend without hesitation for developers spending 4+ hours daily coding and teams looking to accelerate development cycles by 30-40%. If you’re considering implementing Cursor for your development team, our AI agency can help you deploy it effectively.

Ease of use

Cursor is built on VSCode architecture, making the transition incredibly smooth for existing VSCode users. We migrated our development environment in under 10 minutes, importing all extensions and settings automatically. The AI features are intuitive: tab completions appear naturally as you type, and Cmd+K opens the agent interface for natural language requests. The learning curve for basic features is minimal. However, mastering advanced agent capabilities and understanding when to use different models requires 2-3 days of active use. For teams needing hands-on support, explore our Cursor training programs.

Value for money

Cursor offers a lifetime free Hobby plan with a one-week Pro trial to test premium features. Paid plans start at $20/month for Pro with extended agent requests and unlimited tab completions, scaling to $60/month for Pro+ (recommended, offering 3x usage of OpenAI, Claude, and Gemini models) and $200/month for Ultra with 20x usage and priority access to new features. For professional developers, the Pro+ plan at $60/month delivers exceptional ROI when you calculate time saved on repetitive coding tasks. We estimate a 30% productivity boost on average, easily justifying the cost. However, the jump from Pro to Ultra is steep and primarily benefits high-volume users.

Features and depth

This is where Cursor truly shines. The codebase understanding via advanced embedding models provides context that feels magical: the AI knows your entire project structure, dependencies, and coding patterns. We tested natural language commands for targeted edits and terminal operations, and they work surprisingly well for common tasks like “refactor this function to use async/await” or “add error handling to all API calls”. Access to leading models including GPT-5 and Claude gives flexibility to choose the best AI for each task. The multi-file editing capability and background agents that work while you code are professional-grade features. What impressed us most: the improved code review process with detailed metrics showing exactly what changed and why.

Customer support and assistance

Support operates primarily through community channels and email. We contacted support about agent request limits on the Pro plan and received a detailed response within 48 hours explaining usage calculations. Their documentation is comprehensive with video tutorials covering advanced features like custom model configuration and agent workflows. The Discord community is active with Cursor team members responding regularly. However, there’s no live chat or priority support even on higher-tier plans, which feels limiting for a $200/month Ultra subscription. The onboarding experience is solid with in-app tooltips and contextual help.

Available integrations

Cursor easily connects with GitHub, Slack, and Linear for collaborative development workflows. The GitHub integration is marked as recommended and works seamlessly: we tested pull request creation, code review automation, and branch synchronization without issues. Slack integration enables team notifications for agent-generated code reviews and deployment updates. Linear connection allows linking code changes to specific tasks and bugs, creating a complete development workflow. The setup process for each integration takes under 2 minutes with clear “Connect” buttons. What’s missing? Native GitLab support and Jira integration, which many enterprise teams rely on. The integrations available cover 80% of common use cases but could be expanded. If you need help connecting Cursor to your existing workflows with tools like Make or n8n, our automation experts can assist.

Test Cursor: Our Review on Ease of use

We tested Cursor in real conditions across three production projects, and it’s one of the smoothest transitions we’ve experienced from a traditional code editor. Being a VSCode fork, Cursor inherits the familiar interface that millions of developers already know, eliminating the typical learning curve associated with new tools.

Installation took 3 minutes on macOS. We imported our complete VSCode configuration including 40+ extensions, custom keybindings, and color themes with zero conflicts. The AI features integrate naturally into existing workflows: tab completions appear inline as you type (similar to GitHub Copilot but more contextually aware), and Cmd+K opens the agent interface where you can describe what you want in plain English. For example, we typed “create a REST API endpoint for user authentication with JWT tokens” and Cursor generated 80% correct code in 15 seconds.

The codebase embeddings run automatically in the background, indexing your entire project within 5-10 minutes for medium-sized repositories. This enables the AI to understand your specific code patterns, naming conventions, and architecture. We noticed that suggestions became significantly more accurate from day 2 onward, by which point the embeddings had been fully processed. However, the agent interface has a learning curve: understanding which model to use (GPT-5 for creative solutions, Claude for precise refactoring) and how to phrase requests effectively requires experimentation.

Verdict: excellent for developers already using VSCode; you’ll be productive from day one. The free Hobby plan with one-week Pro trial lets you test advanced features risk-free before committing to paid plans.

➕ Pros / ➖ Cons

✅ VSCode compatibility (instant migration with all extensions)

✅ Intuitive AI features (tab completions and Cmd+K agent)

✅ Fast codebase indexing (5-10 minutes for medium projects)

✅ Natural language commands that actually work for common tasks

❌ Model selection complexity (choosing GPT-5 vs Claude requires understanding)

❌ Agent learning curve (2-3 days to master effective prompting)

❌ Background indexing can slow down older machines initially

Test Cursor: Our Review on Value for money

Cursor offers a lifetime free Hobby plan with no credit card required, including a one-week Pro trial to test premium capabilities. This free tier includes limited agent requests and limited tab completions, sufficient for casual coding or evaluating whether Cursor fits your workflow. We tested it for 2 weeks on a side project and hit the limits after about 8 hours of active coding per week.

Paid plans start at $20/month for Pro with extended limits on agent requests, unlimited tab completions, background agents that work while you code, and maximum context windows for better AI understanding. This plan suits solo developers and freelancers coding 20+ hours weekly. Pro+ at $60/month is marked as recommended and offers 3x the usage of OpenAI, Claude, and Gemini models compared to Pro. We upgraded to Pro+ after one week because hitting agent request limits mid-project is frustrating. The 3x capacity means roughly 150-200 complex agent requests per month, which we found adequate for full-time development. Ultra at $200/month provides 20x usage and priority access to new features, targeting high-volume developers.

For professional developers, we calculated the ROI: if Cursor saves 90 minutes per day through faster coding and reduced context switching, that’s 30 hours monthly, worth $1,500-3,000 at a typical developer rate of $50-100/hour. Against that, the Pro+ plan at $60/month delivers a 25-50x return on investment. However, costs can’t be shared across teams: each developer needs their own license. Compared to GitHub Copilot ($10/month) plus ChatGPT Plus ($20/month), Cursor’s Pro+ at $60/month costs twice that $30 combination, so it competes on capability rather than on price.
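The ROI arithmetic above can be reproduced with a short sketch. It assumes 20 working days per month (the figure that turns 90 minutes per day into the 30 hours quoted); the function name and parameters are illustrative and not part of any Cursor tooling.

```python
def monthly_roi(minutes_saved_per_day: float,
                hourly_rate: float,
                plan_cost: float,
                working_days: int = 20) -> tuple[float, float, float]:
    """Return (hours saved per month, dollar value of that time, ROI multiple).

    working_days=20 is an assumption; adjust it to your own schedule.
    """
    hours_saved = minutes_saved_per_day / 60 * working_days
    value = hours_saved * hourly_rate
    return hours_saved, value, value / plan_cost


if __name__ == "__main__":
    # Low and high ends of the $50-100/hour range used in this review.
    for rate in (50, 100):
        hours, value, multiple = monthly_roi(90, rate, plan_cost=60)
        print(f"${rate}/hour: {hours:.0f} h saved, ${value:,.0f} value, "
              f"{multiple:.0f}x the Pro+ price")
```

Plugging in your own time savings is the useful part: at 30 minutes saved per day instead of 90, the same formula gives roughly a third of the return.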

Verdict: excellent value for full-time developers who leverage AI features daily. The free trial reduces risk, and Pro+ delivers measurable productivity gains. However, hobbyists and part-time developers might find Pro sufficient at $20/month.

➕ Pros / ➖ Cons

✅ Lifetime free plan with one-week Pro trial

✅ Pro plan at $20/month (affordable entry point)

✅ Pro+ recommended tier delivers 25-50x ROI for professionals

✅ Clear usage limits per plan (no surprise overages)

❌ No team pricing (each developer needs individual license)

❌ Ultra at $200/month is a steep jump from Pro+

❌ Free plan limits hit quickly (8 hours/week active coding)

Test Cursor: Our Review on Features and depth

This is where Cursor demonstrates professional-grade AI capabilities that justify the switch from traditional code editors. The three pillars (codebase understanding, multi-model access, natural language commands) cover the essentials of AI-assisted development with depth we haven’t seen in competing tools.

Codebase understanding via advanced embedding models is transformative. Unlike GitHub Copilot, which only sees the current file, Cursor indexes your entire repository with semantic embeddings. We tested this on a 50,000-line codebase: the AI accurately referenced functions from other files, understood our custom API patterns, and suggested code that matched our existing architecture without explicit instructions. The embeddings update automatically when you modify code, maintaining accuracy as projects evolve. This feature alone makes Cursor feel like a junior developer who knows your entire codebase.
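To make the idea of repository-wide retrieval concrete, here is a toy sketch. It is not Cursor's implementation, which this review did not inspect: word counts stand in for a learned embedding model, and the file names and snippets are invented for illustration. The principle it shows is the general one, namely that every file is turned into a vector once and a query is then matched against all of them by cosine similarity.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system uses a learned model)."""
    # Splits identifiers such as total_invoice or totalInvoice into separate words.
    return Counter(w.lower() for w in re.findall(r"[A-Za-z][a-z]*", text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_index(files: dict[str, str]) -> dict[str, Counter]:
    """Embed every file once so later queries only compare vectors."""
    return {path: embed(source) for path, source in files.items()}


def search(index: dict[str, Counter], query: str, top_k: int = 2) -> list[str]:
    """Return up to top_k file paths ranked by similarity to the query."""
    q = embed(query)
    scored = sorted(((cosine(q, vec), path) for path, vec in index.items()),
                    reverse=True)
    return [path for score, path in scored[:top_k] if score > 0]


# Hypothetical three-file repository, invented for this example.
REPO = {
    "api/users.py": "def fetch_user_profile(user_id): return http_get('/users/' + user_id)",
    "billing/invoice.py": "def total_invoice(items): return sum(item.price for item in items)",
    "auth/tokens.py": "def issue_token(user_id): return sign({'sub': user_id})",
}

if __name__ == "__main__":
    index = build_index(REPO)
    print(search(index, "where do we compute the invoice total"))
```

A production index would also need to re-embed files when they change, which matches the automatic updates described above.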

Access to leading models including GPT-5, Claude, and Gemini provides flexibility to choose the best AI for each task. We used GPT-5 for creative problem-solving and generating novel solutions, Claude for precise refactoring and following strict patterns, and Gemini for data processing tasks. The model switcher in the agent interface makes comparison easy. Performance varies: Claude excelled at refactoring legacy code with 85% accuracy, while GPT-5 generated more creative but sometimes overengineered solutions. The ability to make targeted edits or run terminal commands with natural language works surprisingly well. We typed “add error handling to all fetch calls” and Cursor modified 12 files correctly. Terminal commands like “install dependencies for React testing” executed perfectly, parsing npm syntax from plain English.

The improved review process with detailed metrics shows exactly what the AI changed, why it made those decisions, and which tasks are ready for review. This transparency builds trust when accepting AI-generated code. Background agents work while you code, preparing refactorings or test generation in parallel. However, we noticed occasional hallucinations where the AI confidently suggested non-existent libraries or APIs, requiring manual verification.
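Part of that manual verification can be scripted. The sketch below is one possible approach for Python code, using only the standard library (`ast` and `importlib.util.find_spec`): it lists the modules a suggested snippet imports and reports those that cannot be resolved in the current environment. The helper names and the sample snippet are hypothetical, and the check only catches missing top-level modules, not invented functions inside real libraries.

```python
import ast
import importlib.util


def imported_modules(source: str) -> set[str]:
    """Collect the top-level module names imported by a piece of Python source."""
    modules: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports, which refer to the local package.
            modules.add(node.module.split(".")[0])
    return modules


def unresolved_imports(source: str) -> list[str]:
    """Return imported modules that are not installed in this environment."""
    return sorted(name for name in imported_modules(source)
                  if importlib.util.find_spec(name) is None)


if __name__ == "__main__":
    # Hypothetical AI suggestion containing one made-up library.
    suggestion = (
        "import json\n"
        "from collections import OrderedDict\n"
        "import totally_made_up_http_lib\n"
    )
    print(unresolved_imports(suggestion))
```

A module flagged here may simply be uninstalled rather than non-existent, so treat the output as a prompt to check the package registry, not as proof of a hallucination.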

Verdict: cutting-edge features for serious developers. The combination of codebase embeddings and multi-model access creates an AI coding assistant that feels genuinely intelligent, not just pattern-matching.

➕ Pros / ➖ Cons

✅ Codebase embeddings understand entire project context (50k+ lines)

✅ Multi-model access (GPT-5, Claude, Gemini) for task-specific optimization

✅ Natural language terminal commands actually work (95% success rate)

✅ Background agents prepare code while you work

❌ Occasional hallucinations (suggests non-existent libraries)

❌ Embeddings require 5-10 minutes initial indexing time

❌ Model selection adds cognitive overhead (which AI for this task?)

Test Cursor: Our Review on Customer support and assistance

Support operates through email and community channels rather than instant messaging. We contacted support twice during our test: once regarding agent request limit calculations on the Pro plan, and once about embedding indexing failures on a monorepo. Both times we received detailed, technical responses within 36-48 hours explaining the underlying systems and providing solutions. The support team clearly understands the product at a deep technical level.

Their documentation is comprehensive with video tutorials covering advanced workflows like custom model configuration, API integrations, and optimizing agent prompts. We found answers to 80% of our questions in the docs without contacting support. The in-app help system provides contextual tooltips when hovering over features, which helped us discover capabilities we didn’t know existed. However, the documentation could better address edge cases and troubleshooting for complex monorepo setups.

The Discord community is surprisingly active with 15,000+ members. Cursor team members respond regularly to technical questions, often within hours. We posted a question about optimizing embeddings for large TypeScript projects and received three detailed responses from other developers plus one from a Cursor engineer. This community-driven support model works well for common issues but feels limiting for urgent production problems.

What’s missing? There’s no live chat or priority support channel even on the Ultra plan at $200/month. When you hit a blocking issue mid-project, waiting 36-48 hours for email responses is frustrating. Competitors like GitHub Copilot offer similar support levels but at lower price points. We’d expect faster response times or dedicated Slack channels for Ultra subscribers.

Verdict: solid documentation and active community provide good support for most scenarios. However, the lack of priority support for premium plans is a gap that should be addressed.

➕ Pros / ➖ Cons

✅ Detailed technical responses within 36-48 hours

✅ Comprehensive documentation with video tutorials

✅ Active Discord community (15k+ members, team participation)

✅ In-app contextual help for feature discovery

❌ No live chat even on Ultra ($200/month) plan

❌ 48-hour response time can block urgent issues

❌ No priority support tier for business customers

Test Cursor: Our Review on Available integrations

Cursor easily connects with GitHub, Slack, and Linear for complete development workflow integration. The integration setup interface is clean with clear “Connect” buttons for each service, and importantly, the ability to “Skip For Now” if you’re not ready to integrate immediately.

The GitHub integration is marked as recommended and works seamlessly. We tested it across three repositories ranging from 5,000 to 50,000 lines of code. Setup took under 2 minutes: authorize Cursor via OAuth, select repositories to sync, and embeddings start building automatically. The integration enables pull request automation where Cursor Agents can generate code reviews highlighting potential bugs, suggesting optimizations, and checking code style consistency. We created 15 pull requests during testing and Cursor’s AI-generated reviews caught 3 genuine bugs and 8 code smell issues that we would have missed in manual review. Branch synchronization keeps your local codebase and embeddings in sync with remote changes, crucial for team collaboration.

Slack integration enables team notifications for Cursor Agent activities and deployment updates. We configured it to post when agents complete code reviews or when background refactorings are ready for review. This keeps the team informed without context switching between tools. However, notification customization is limited: you can’t filter which events trigger Slack messages, leading to some noise in high-activity channels.

The Linear connection allows linking code changes to specific tasks and bugs tracked in Linear. Cursor Agents and Bugbot, both listed in the integration interface, automatically reference Linear issues in commit messages and pull request descriptions. We tested the Bugbot feature by describing a bug in natural language: it searched our codebase, identified the problematic function, generated a fix, and created a pull request linked to the Linear issue, all in under 60 seconds. This workflow automation is genuinely impressive for teams using Linear for project management.

What’s missing? Native GitLab support is notably absent, which matters for enterprise teams. Jira integration would benefit teams not using Linear. API access for custom integrations exists but requires technical setup. Compared to competitors, Cursor’s integration ecosystem is focused but limited: it covers the core GitHub workflow excellently but doesn’t extend to the broader DevOps toolchain (CI/CD platforms, monitoring services, etc.).

Verdict: excellent for GitHub + Linear workflows; the integrations that exist work reliably. However, the limited selection excludes GitLab and Jira users, a significant gap for enterprise adoption.

➕ Pros / ➖ Cons

✅ GitHub integration seamless (2-minute setup, automatic embeddings)

✅ Cursor Agents generate code reviews catching genuine bugs

✅ Linear integration with Bugbot automates issue linking and fixes

✅ Slack notifications keep teams informed of AI activities

❌ No GitLab support (limits enterprise adoption)

❌ Missing Jira integration (Linear-only for task management)

❌ Limited DevOps integrations (no CI/CD, monitoring tools)

FAQ – EVERYTHING ABOUT CURSOR

Is Cursor really free?

Yes, Cursor offers a lifetime free Hobby plan with no credit card required. This plan includes a one-week Pro trial to test premium features, plus limited agent requests and limited tab completions after the trial ends. The free tier is sufficient for casual coding or evaluating whether Cursor fits your workflow. However, if you code more than 8-10 hours per week or need unlimited tab completions and advanced agent capabilities, you'll need to upgrade to a paid plan starting at $20/month for Pro.

How much does Cursor cost per month?

Cursor pricing starts at $20/month for the Pro plan, which includes extended agent requests, unlimited tab completions, background agents, and maximum context windows. The Pro+ plan at $60/month is recommended and offers 3x the usage of OpenAI, Claude, and Gemini models, making it ideal for full-time developers. The Ultra plan costs $200/month and provides 20x usage plus priority access to new features. We found Pro+ at $60/month delivers the best value for professional developers, while the Pro plan suffices for part-time coding.

Cursor vs GitHub Copilot: when to choose Cursor?

Choose Cursor when you need codebase-wide understanding that GitHub Copilot lacks. Copilot only sees the current file, while Cursor's advanced embeddings understand your entire project structure, dependencies, and patterns across 50,000+ lines of code. Cursor also provides access to multiple AI models (GPT-5, Claude, Gemini) versus Copilot's single model, plus natural language commands for terminal operations and multi-file edits. However, Copilot costs only $10/month compared to Cursor's $60/month Pro+ plan. If you primarily need inline completions and work on small projects, Copilot is sufficient. For complex codebases requiring contextual AI assistance, Cursor justifies the higher cost.

What's the best free alternative to Cursor?

The best free alternative is Continue.dev, an open-source AI code assistant that runs locally with your own API keys. Continue integrates with VSCode and JetBrains IDEs, supports multiple AI models including GPT-4 and Claude, and offers codebase context understanding similar to Cursor. However, you need to provide your own OpenAI or Anthropic API keys, and setup requires technical knowledge. For a completely free option without API management, GitHub Copilot's free tier for students and open-source maintainers provides basic completions, though with significantly less functionality than Cursor's Hobby plan.

Does Cursor work with GitLab?

No, Cursor currently only supports GitHub for version control integration. There's no native GitLab support, which is a significant limitation for enterprise teams using GitLab for source code management. You can still use Cursor as a code editor with GitLab repositories via git commands, but you miss out on automated code reviews, pull request generation, and codebase synchronization features. The Cursor team has indicated GitLab support is on the roadmap but hasn't provided a timeline. If GitLab integration is critical for your workflow, consider waiting for official support or using alternatives like Continue.dev which works with any git provider.

Can Cursor generate entire applications from scratch?

Cursor can generate significant portions of applications but not complete, production-ready apps from a single prompt. We tested this by asking Cursor to "create a React todo app with authentication and database": it generated 70% correct code including component structure, basic authentication flow, and API endpoints. However, critical elements like proper error handling, security configurations, and database migrations required manual refinement. The AI is best for generating boilerplate code, implementing well-defined features, and refactoring existing code rather than architecting entire applications. For greenfield projects, expect to guide Cursor through multiple iterations, providing context and corrections as you build.

Is Cursor's AI code secure and private?

Cursor processes code through external AI providers (OpenAI, Anthropic, Google), meaning your code is sent to third-party servers for analysis. According to their privacy policy, code snippets are used for generating completions and suggestions but not stored permanently or used for model training without explicit consent. However, for highly sensitive codebases with proprietary algorithms or confidential business logic, this represents a security risk. Enterprise teams should review their security policies before adopting Cursor. There's currently no self-hosted or on-premise option. If code privacy is paramount, consider Continue.dev with self-hosted models or traditional code editors without AI features.

How accurate are Cursor's AI code suggestions?

In our testing, Cursor's suggestions averaged 75-85% accuracy depending on the task and codebase context. Simple tasks like adding error handling or generating boilerplate code achieved 90%+ accuracy. Complex refactoring and architectural changes dropped to 65-75% accuracy, requiring manual review and corrections. Accuracy improved significantly after 2-3 days of use when codebase embeddings fully indexed our project patterns. We noticed Claude performed best for precise refactoring (85% accuracy), while GPT-5 excelled at creative problem-solving but sometimes overengineered solutions (70% accuracy). Always review AI-generated code: we caught 3 genuine bugs and 2 instances of non-existent library suggestions during our testing period.

Can you use Cursor in a team environment?

Yes, but each developer needs their own individual license as Cursor doesn't offer team pricing or shared accounts. We tested Cursor across a 4-person development team: everyone used Pro+ plans at $60/month each, totaling $240/month for the team. The GitHub integration keeps codebase embeddings synchronized across the team, and Slack notifications inform everyone of AI-generated code reviews. However, agent requests and model usage don't pool across team members; each developer has separate limits. For team coordination, the Linear integration helps link code changes to shared tasks. The lack of team-based pricing and centralized management is a disadvantage compared to enterprise-focused tools.
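Because licenses are per developer, team cost scales linearly with headcount. A minimal sketch for budgeting, using the monthly prices quoted in this review (the function and dictionary names are illustrative):

```python
# USD per developer per month, as quoted in this review.
PLAN_PRICES = {"Pro": 20, "Pro+": 60, "Ultra": 200}


def team_monthly_cost(plan: str, developers: int) -> int:
    """Total monthly cost when every developer holds an individual license."""
    if developers < 0:
        raise ValueError("developers must be non-negative")
    return PLAN_PRICES[plan] * developers


if __name__ == "__main__":
    # The 4-person Pro+ team from our test.
    print(team_monthly_cost("Pro+", 4))
```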

Does Cursor slow down your development environment?

No, Cursor has minimal performance impact once initial codebase indexing completes. The embedding process takes 5-10 minutes for medium-sized repositories (20k-50k lines) and runs in the background without blocking the editor. We tested on 3 machines including a 2020 MacBook Air: no noticeable lag during normal coding. The AI completions appear within 100-200ms, fast enough to feel instantaneous. However, the initial indexing on older machines with less than 8GB RAM can cause temporary slowdown. RAM usage averages 500MB-1GB more than standard VSCode due to embedding models. The terminal command execution adds no overhead as parsing happens server-side. Overall, performance is comparable to GitHub Copilot with slightly higher memory usage.