APIFY N8N INTEGRATION: AUTOMATE APIFY WITH N8N

Looking to automate Apify with n8n? You’re in the right place. The Apify n8n integration gives you access to 1 trigger and 12 actions that let you connect your web scraping and data extraction workflows to hundreds of other applications—without writing a single line of code.

Whether you need to automatically launch actors when certain conditions are met, retrieve datasets on schedule, or chain complex scraping operations with your CRM, email marketing, or database tools, this integration opens up serious automation possibilities. From monitoring actor executions to pulling key-value store records, you’ll find everything you need to build sophisticated data pipelines.

In this guide, we’ll walk through each available trigger and action, explain their parameters in plain terms, and show you how to get the most out of the Apify n8n connection.


WHY AUTOMATE APIFY WITH N8N?

The Apify n8n integration changes how you handle web scraping and data extraction workflows. Instead of manually checking actor runs, downloading datasets, or triggering scrapers by hand, you can build automated pipelines that run around the clock without your intervention.

The benefits fall into three groups. Time savings come first: there is no need to log into Apify’s dashboard to check whether your actors completed successfully and then manually export data to spreadsheets or databases. You set up a workflow once, and it handles those steps automatically. Responsiveness improves too: the moment an actor succeeds (or fails), your workflow can notify your team, update a CRM record, or trigger a follow-up action. Finally, unattended operation means your scraping jobs keep running whether you’re asleep, on vacation, or focused on higher-value work.

Concrete workflow examples include: automatically pushing scraped lead data into HubSpot when an actor finishes, sending Slack alerts when scraping jobs fail, syncing Apify datasets with Google Sheets for up-to-date dashboards, or chaining multiple actors together so that one actor’s output becomes another’s input. The Apify n8n integration connects to more than 400 applications in n8n’s ecosystem, making your scraped data actionable.

HOW TO CONNECT APIFY TO N8N?

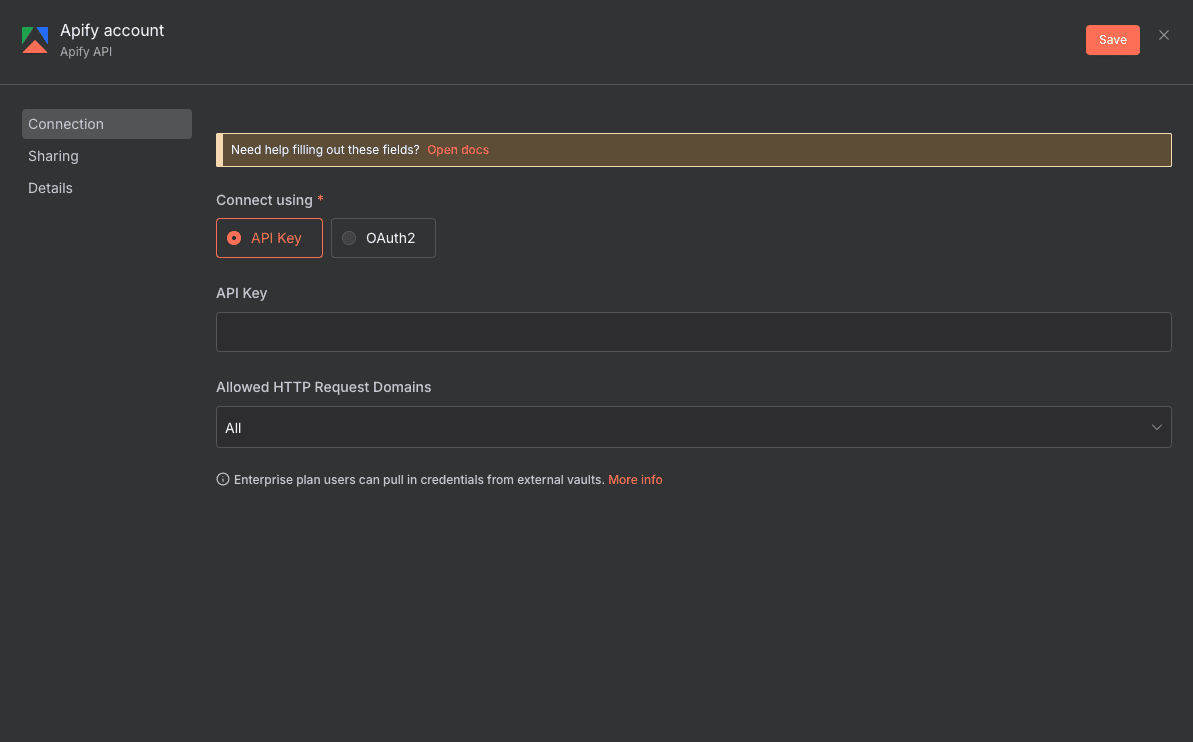

Basic configuration:

- Get your Apify API token: Log into your Apify account, navigate to Settings → Integrations, and copy your Personal API Token. Keep this secure—it grants full access to your account.

- Add credentials in n8n: In your n8n workflow, add an Apify node (trigger or action). Click on “Credential to connect with” and select “Create New.”

- Paste your API token: Enter your Apify API token in the designated field. Give your credential a recognizable name like “Apify – Main Account.”

- Test the connection: n8n will validate your credentials automatically. If successful, you’ll see a confirmation message.

- Start building: Your Apify connection is now ready. You can use it across any Apify trigger or action in your workflows.

💡 TIP: Create separate API tokens for different n8n workflows or environments (production vs. testing). This way, if you need to revoke access to one workflow, you won’t disrupt others. Apify allows multiple API tokens per account, so take advantage of this for better security hygiene.
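If you want to confirm that a token is valid before pasting it into n8n, one option is to call the Apify API directly. The sketch below only builds the request (it does not send it) for the `GET /v2/users/me` endpoint with a bearer token. The helper name and the placeholder token are illustrative assumptions, not part of the n8n node.

```python
import urllib.request

APIFY_API_BASE = "https://api.apify.com/v2"


def build_token_check_request(token: str) -> urllib.request.Request:
    """Build a GET request that asks Apify which account owns the token."""
    if not token:
        raise ValueError("An Apify API token is required")
    return urllib.request.Request(
        f"{APIFY_API_BASE}/users/me",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# "demo-token" is a placeholder; substitute a real token to test your own account.
request = build_token_check_request("demo-token")
print(request.get_method(), request.full_url)
# Sending it is left to the reader: urllib.request.urlopen(request)
# raises urllib.error.HTTPError (status 401) when the token is rejected.
```

Keeping the request-building step separate from the network call makes it easy to reuse the same check across several tokens, for example one per environment.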

APIFY TRIGGERS AVAILABLE IN N8N

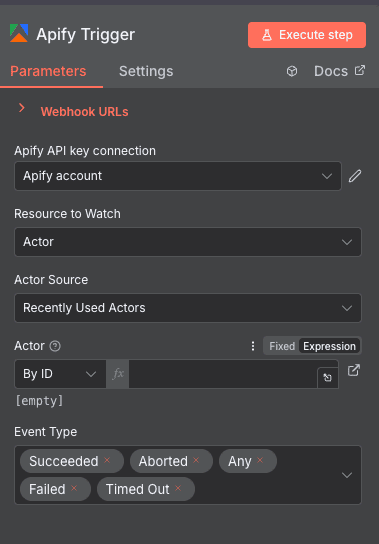

Apify Trigger

The Apify Trigger is your gateway to event-driven automation. This trigger listens for events from the Apify platform related to actor executions, automatically firing your workflow whenever specified conditions are met. Instead of polling for updates or manually checking run statuses, this trigger delivers real-time notifications straight into your n8n workflow.

Configuration parameters: The trigger requires you to select your Apify API Key Connection from a dropdown menu for authentication. You must specify the Resource to Watch (set to “Actor”), choose an Actor Source such as “Recently Used Actors,” and identify the exact Actor you want to monitor. The most crucial parameter is the Event Type dropdown, where you select which events fire your workflow: “Succeeded,” “Aborted,” “Any,” “Failed,” or “Timed Out.”

Typical use cases: Trigger Slack notifications immediately when a scraping actor completes successfully, automatically restart failed actors or escalate to human review when jobs time out, chain multiple actors together where Actor A’s success triggers Actor B to start, or update CRM records and databases the instant new scraped data becomes available.
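To make the branching concrete, here is a minimal sketch of the routing logic these use cases imply. It assumes the data reaching your workflow resembles Apify’s default webhook payload, with an `eventType` field such as `ACTOR.RUN.SUCCEEDED`. The mapping from dropdown labels to event names and the returned step names (`notify_success`, `escalate`, `log_only`) are assumptions for illustration; in n8n you would typically express the same logic with a Switch or IF node.

```python
# Assumed mapping from the trigger's dropdown labels to Apify webhook event types.
EVENT_TYPES = {
    "Succeeded": "ACTOR.RUN.SUCCEEDED",
    "Failed": "ACTOR.RUN.FAILED",
    "Aborted": "ACTOR.RUN.ABORTED",
    "Timed Out": "ACTOR.RUN.TIMED_OUT",
}


def route_event(payload: dict) -> str:
    """Pick a hypothetical next step based on the event type in the payload."""
    event_type = payload.get("eventType", "")
    if event_type == EVENT_TYPES["Succeeded"]:
        return "notify_success"
    if event_type in (EVENT_TYPES["Failed"], EVENT_TYPES["Timed Out"]):
        return "escalate"
    if event_type == EVENT_TYPES["Aborted"]:
        return "log_only"
    return "ignore"


# Placeholder IDs; a real payload carries the actual actor and run identifiers.
sample = {
    "eventType": "ACTOR.RUN.FAILED",
    "eventData": {"actorId": "example-actor-id", "actorRunId": "example-run-id"},
}
print(route_event(sample))  # escalate
```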

When to use it: This trigger is ideal when you need immediate response to Apify events. Perfect for time-sensitive scraping jobs, monitoring critical data extraction pipelines, or building complex multi-step automation workflows where timing matters.

NEED HELP AUTOMATING APIFY WITH N8N?

We’ll get back to you in minutes ✔

APIFY ACTIONS AVAILABLE IN N8N

Scrape single URL

The Scrape single URL action is perfect for quick, targeted scraping tasks where you need to extract data from one specific webpage. This action leverages Apify’s powerful crawling infrastructure directly from your n8n workflow.

Key parameters: You must provide your Apify API key connection through a dropdown selection, set the Resource to “Actor,” and specify the Operation as “Scrape Single URL.” The URL parameter requires a valid webpage address in text format, and you must select a Crawler Type from the dropdown menu to define the crawling method.

Use cases: Extract product details from a single e-commerce page, scrape a specific article for content analysis, or pull structured data from any publicly accessible URL as part of a larger workflow.

Run an Actor

Run an Actor gives you full control to execute any Apify actor directly from n8n, passing custom inputs and configuring execution parameters. This is your workhorse action for triggering scraping jobs programmatically.

Key parameters: Authentication requires your Apify API key connection. You select an Actor Source (like “Recently Used Actors”) and specify the exact Actor to execute. Optional parameters include Input JSON for custom runtime data, a Wait for Finish toggle (off by default), Timeout (the Apify API takes this value in seconds), Memory allocation (e.g., “1024 MB”), and Build Tag to target specific actor versions.
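For readers curious how these parameters look at the API level, the sketch below builds the URL and JSON body for Apify’s “run Actor” endpoint (`POST /v2/acts/{actorId}/runs`) using its `timeout`, `memory`, `build`, and `waitForFinish` query parameters. The function name, the actor ID `username~my-actor`, and the input fields are hypothetical, and this is not necessarily how the n8n node assembles its request internally.

```python
import json
from urllib.parse import quote, urlencode


def build_run_request(actor_id, run_input, *, timeout_secs=None,
                      memory_mbytes=None, build=None, wait_for_finish_secs=None):
    """Return (url, body) for starting an actor run; nothing is sent."""
    params = {}
    if timeout_secs is not None:
        params["timeout"] = timeout_secs          # seconds
    if memory_mbytes is not None:
        params["memory"] = memory_mbytes          # megabytes
    if build:
        params["build"] = build                   # build tag or number
    if wait_for_finish_secs is not None:
        params["waitForFinish"] = wait_for_finish_secs  # seconds to wait
    url = f"https://api.apify.com/v2/acts/{quote(actor_id, safe='~')}/runs"
    if params:
        url += "?" + urlencode(params)
    body = json.dumps(run_input).encode("utf-8")
    return url, body


# Hypothetical actor ID and input; real actors define their own input schema.
url, body = build_run_request(
    "username~my-actor",
    {"searchTerm": "standing desks"},
    timeout_secs=300,
    memory_mbytes=1024,
    build="latest",
)
print(url)
```

Passing upstream workflow data into `run_input` is the programmatic equivalent of filling the Input JSON field with an n8n expression.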

Use cases: Launch scheduled scraping jobs from n8n triggers, dynamically pass search parameters based on upstream workflow data, or run different actor configurations based on conditional logic in your automation.

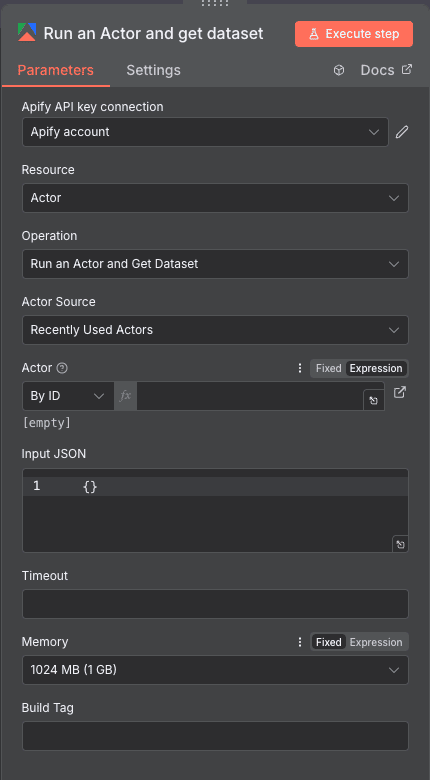

Run an Actor and get dataset

This action combines actor execution with automatic dataset retrieval—run your scraper and get results back in one step. Perfect for workflows that need to immediately process scraped data.

Key parameters: Required parameters include your Apify API key connection, Actor Source selection, and Actor identification (can be by ID). Optional configurations allow Input JSON for custom parameters, Timeout in seconds, Memory allocation in megabytes, and Build Tag for version targeting.

Use cases: Scrape and immediately enrich CRM contacts, extract data and send directly to Google Sheets, or build pipelines where scraped output feeds directly into downstream processing nodes.

Get user runs list

Retrieve a comprehensive list of all your actor runs, with filtering and pagination options. Essential for monitoring, auditing, and building dashboards around your scraping operations.

Key parameters: Requires Apify API key connection and Resource set to “Actor Run.” Optional parameters include Offset (starting point for pagination, defaults to 0), Limit (maximum results, defaults to 50), a Desc toggle for sorting runs in descending order (newest first), and a Status dropdown to filter by execution status like “SUCCEEDED.”

Use cases: Build monitoring dashboards showing recent scraping activity, audit successful vs. failed runs over time, or create alerts when failure rates exceed thresholds.
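The failure-rate alert mentioned above comes down to simple arithmetic over the returned run list. The sketch below assumes each run is a dictionary with a `status` field holding one of Apify’s run statuses. The function name and the threshold value are illustrative choices; inside n8n, a Code node could perform the same calculation.

```python
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "TIMED-OUT", "ABORTED"}
FAILURE_STATUSES = {"FAILED", "TIMED-OUT"}


def failure_rate(runs: list) -> float:
    """Share of finished runs that failed or timed out (0.0 if none finished)."""
    finished = [run for run in runs if run.get("status") in TERMINAL_STATUSES]
    if not finished:
        return 0.0
    failed = sum(1 for run in finished if run["status"] in FAILURE_STATUSES)
    return failed / len(finished)


# Made-up sample data; the RUNNING entry is ignored because it has not finished.
runs = [
    {"status": "SUCCEEDED"},
    {"status": "FAILED"},
    {"status": "SUCCEEDED"},
    {"status": "RUNNING"},
    {"status": "TIMED-OUT"},
]
ALERT_THRESHOLD = 0.25  # hypothetical threshold
rate = failure_rate(runs)
print(rate, rate > ALERT_THRESHOLD)  # 0.5 True
```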

Run Task and Get Dataset

Execute a pre-configured actor task and retrieve its dataset in one seamless operation. Tasks are saved actor configurations in Apify, making this action ideal for standardized, repeatable scraping jobs.

Key parameters: Authentication via Apify API key connection is required, with Resource set to “Actor Task.” You must select the specific Actor Task to run (by ID or selection) and choose a Memory allocation from the required dropdown. Optional parameters include a Use Custom Body toggle, a Timeout for maximum execution time, and a Build specification for actor version control.

Use cases: Run standardized scraping jobs with consistent configurations, execute recurring data extraction tasks, or trigger pre-built tasks from external events.

Get runs

Retrieve detailed information about runs for a specific actor. Use this to monitor individual actor performance or fetch run history for reporting.

Key parameters: Requires Apify API key connection and Resource set to “Actor Run.” You must specify which Actor’s runs to retrieve (by ID or selection). Optional parameters include Offset for pagination (defaults to 0), Limit for maximum results (defaults to 50), Desc toggle for descending sort order, and Status dropdown for filtering by run status.

Use cases: Generate actor-specific performance reports, track run history for compliance purposes, or identify patterns in execution times and outcomes.

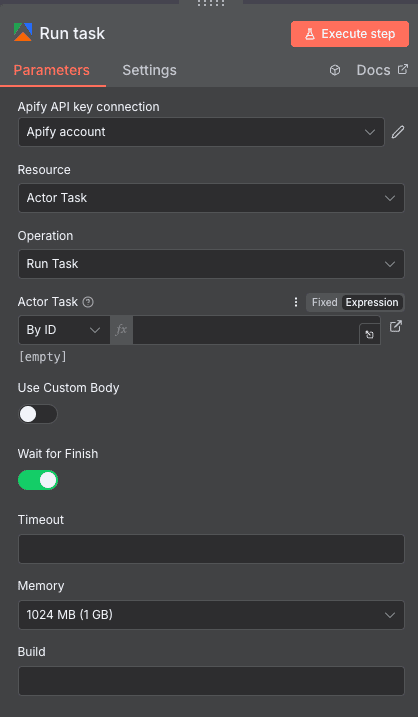

Run task

Execute a saved actor task with full parameter control. Unlike “Run Task and Get Dataset,” this action doesn’t automatically retrieve results—giving you more flexibility in complex workflows.

Key parameters: Requires your Apify API key connection, Resource set to “Actor Task,” the Actor Task to run (by ID), and a Memory allocation such as “1024 MB (1 GB).” Optional parameters include a Use Custom Body toggle, a Wait for Finish toggle (off by default), Timeout (the Apify API takes this value in seconds), and a Build number for targeting a specific actor version.

Use cases: Trigger tasks asynchronously without waiting for results, run tasks where dataset retrieval happens later via separate action, or build fire-and-forget scraping workflows.

Get run

Retrieve detailed information about a single, specific actor run using its unique Run ID. Perfect for checking status, debugging, or pulling metadata about completed runs.

Key parameters: Requires Apify API key connection for API access and Resource set to “Actor Run.” The critical parameter is Run ID—a required text input that accepts expressions to identify the specific run you want to retrieve information about.

Use cases: Check status of a previously triggered run, retrieve execution logs for debugging failed jobs, or pull run metadata for reporting and analytics.
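When a run was started without waiting for it to finish, checking its status usually means polling until it reaches a terminal state. The sketch below shows that loop in isolation. The `get_run` callable stands in for whatever fetches the run (for example, this action or an HTTP call), and its name, the interval, and the attempt limit are assumptions for illustration.

```python
import time
from typing import Callable

TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "TIMED-OUT", "ABORTED"}


def wait_for_run(get_run: Callable[[str], dict], run_id: str, *,
                 interval_secs: float = 5.0, max_attempts: int = 60,
                 sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll get_run(run_id) until the run reaches a terminal status."""
    for attempt in range(max_attempts):
        run = get_run(run_id)
        if run.get("status") in TERMINAL_STATUSES:
            return run
        if attempt < max_attempts - 1:
            sleep(interval_secs)
    raise TimeoutError(f"Run {run_id} did not finish after {max_attempts} checks")


# Fake fetcher that simulates a run progressing through its statuses.
_statuses = iter(["READY", "RUNNING", "SUCCEEDED"])


def fake_get_run(run_id: str) -> dict:
    return {"id": run_id, "status": next(_statuses)}


result = wait_for_run(fake_get_run, "example-run-id", sleep=lambda _: None)
print(result["status"])  # SUCCEEDED
```

In n8n, the same pattern is commonly built with a Wait node and an IF node looping back to Get run.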

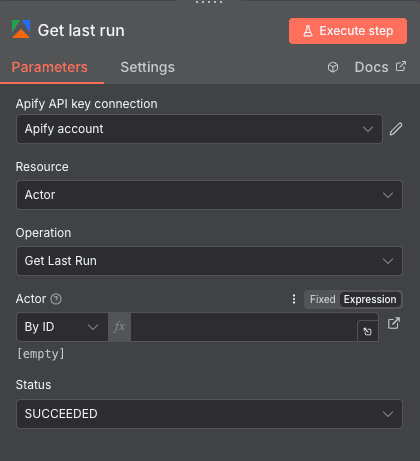

Get last run

Quickly access the most recent run of a specific actor, optionally filtered by status. This shortcut saves you from querying run lists when you just need the latest execution.

Key parameters: Required dropdown for Apify API key connection and Resource set to “Actor.” You must specify the Actor to query (by ID or expression). Optional Status dropdown allows filtering by execution status like “SUCCEEDED.”

Use cases: Get the latest successful scrape results, check if the most recent run completed without errors, or build workflows that always reference fresh data from the last execution.

Get items

Retrieve items from a specific Apify dataset. After running an actor, use this action to fetch the extracted data for processing in your workflow.

Key parameters: Required authentication via Apify API key connection, with Resource fixed to “Dataset” and Operation set to “Get Items.” You must provide a Dataset ID (unique identifier as text input) and a Limit for the number of items to retrieve (defaults to 50). An optional Offset sets the pagination starting point.

Use cases: Fetch scraped data for transformation or enrichment, pull dataset contents into other applications like databases or spreadsheets, or paginate through large datasets in chunks.
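Paginating through a large dataset means advancing Offset by Limit until a page comes back short. The sketch below captures that loop with a stand-in `fetch_page(offset, limit)` callable, which is a hypothetical name for whatever retrieves one page of items. The in-memory list simulates a dataset so the example runs without network access.

```python
from typing import Callable, Iterator


def iter_items(fetch_page: Callable[[int, int], list], *,
               limit: int = 50) -> Iterator[dict]:
    """Yield every item by requesting pages of `limit` items in sequence."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    offset = 0
    while True:
        page = fetch_page(offset, limit)
        yield from page
        if len(page) < limit:  # a short (or empty) page means no more items
            break
        offset += limit


# Simulated dataset of 120 items served in slices.
dataset = [{"id": i} for i in range(120)]


def fake_fetch_page(offset: int, limit: int) -> list:
    return dataset[offset:offset + limit]


items = list(iter_items(fake_fetch_page, limit=50))
print(len(items))  # 120
```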

Get key-value store record

Access specific records from Apify’s key-value stores using their unique keys. Key-value stores are perfect for caching, configuration storage, or passing data between actors.

Key parameters: Required dropdown for Apify API key connection, with Resource fixed to “Key-Value Store.” You must provide a Key-Value Store ID (required, by ID) and a Key-Value Store Record Key (required text input) to retrieve the specific record.

Use cases: Fetch cached scraping results, retrieve configuration data stored in Apify, or access screenshots, PDFs, or other files stored in key-value stores.
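Because a key-value store record can hold JSON, text, or binary files, downstream steps often need to branch on the content type. The sketch below shows one way to do that given a content type string and raw bytes. The function name is hypothetical, and how n8n itself exposes the record (as JSON or as binary data) is not covered here.

```python
import json


def decode_record(content_type: str, body: bytes):
    """Return parsed JSON, decoded text, or raw bytes depending on content type."""
    media_type = content_type.split(";")[0].strip().lower()
    if media_type == "application/json":
        return json.loads(body.decode("utf-8"))
    if media_type.startswith("text/"):
        return body.decode("utf-8")
    return body  # screenshots, PDFs, and other files stay as bytes


print(decode_record("application/json; charset=utf-8", b'{"pages": 3}'))  # {'pages': 3}
print(type(decode_record("image/png", b"\x89PNG")).__name__)  # bytes
```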

FREQUENTLY ASKED QUESTIONS ABOUT APIFY N8N INTEGRATION

Is the Apify n8n integration free?

The n8n side is free if you're self-hosting n8n or within your n8n cloud plan limits. However, Apify operates on its own pricing model with a free tier that includes limited compute units per month. When you run actors through n8n, you're consuming your Apify compute credits just as you would running them directly in Apify. For most small to medium scraping operations, Apify's free tier is sufficient to get started. As your automation scales, you'll want to monitor your Apify usage and upgrade as needed.
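To estimate how quickly a workflow will use up compute units, recall that Apify defines one compute unit as 1 GB of memory used for one hour. The sketch below applies that formula. The function name and the sample figures are illustrative, and actual billing may include other usage-based charges not modeled here.

```python
def compute_units(memory_mbytes: int, duration_secs: float) -> float:
    """Compute units = memory in GB multiplied by run time in hours."""
    return (memory_mbytes / 1024) * (duration_secs / 3600)


# A 1024 MB run lasting one hour uses one compute unit.
print(compute_units(1024, 3600))  # 1.0
# A 4096 MB run lasting 15 minutes also uses one compute unit.
print(compute_units(4096, 900))   # 1.0
# Hypothetical schedule: a 512 MB run of 30 minutes, once a day for 30 days.
print(compute_units(512, 1800) * 30)  # 7.5
```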

What data can I sync between Apify and n8n?

The integration lets you work with virtually all Apify data types. You can retrieve complete datasets from scraping runs, access individual key-value store records (which can contain JSON, files, screenshots, or any binary data), pull detailed metadata about actor runs including logs and statistics, and monitor real-time events through triggers. On the input side, you can pass any JSON-formatted data to actors, configure execution parameters dynamically, and trigger tasks with custom bodies. This bidirectional capability means you can both push configurations into Apify and pull results back into n8n.

How long does it take to set up the Apify n8n integration?

Initial setup takes about 5 minutes—just copy your API token from Apify and paste it into n8n's credentials manager. Building your first basic workflow (like triggering a Slack notification when an actor completes) typically takes another 10-15 minutes. More complex workflows involving multiple actors, conditional logic, or data transformations naturally take longer, but the node-based interface makes iteration fast. If you've already designed your actors in Apify, connecting them to n8n is the quickest part of the process.